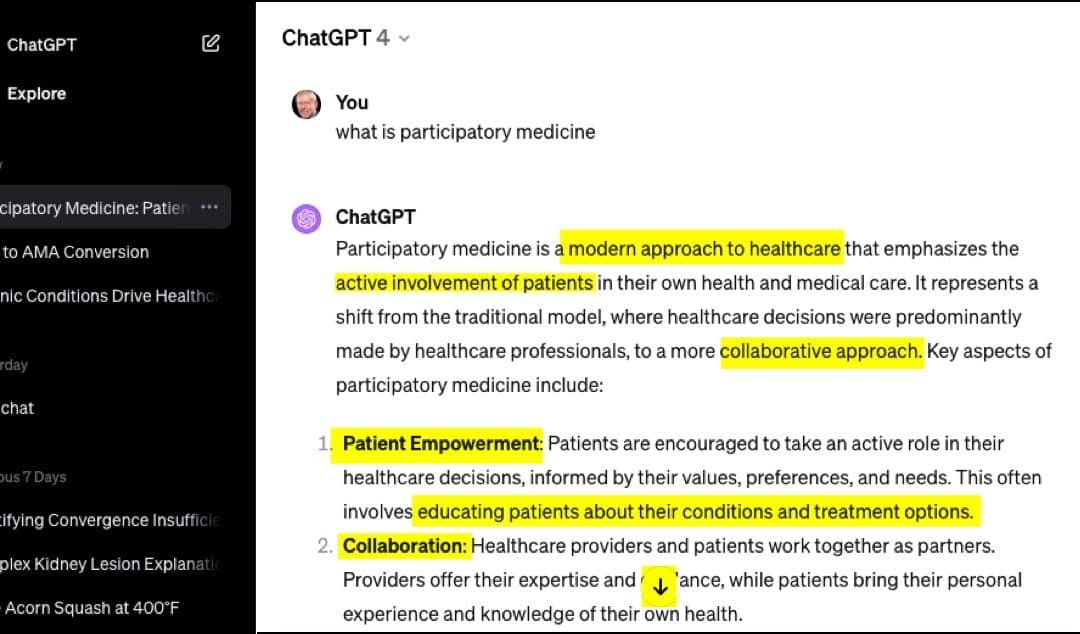

I’m working on lots of things about generative AI in healthcare, because among other things, “GenAI” is incredibly empowering and liberating for e-patients. For kicks I decided to ask GPT-4 what it thinks participatory medicine is. Here’s its answer. Couldn’t have said it better myself!

Prompt: What is participatory medicine?

Participatory medicine is a modern approach to healthcare that emphasizes the active involvement of patients in their own health and medical care. It represents a shift from the traditional model, where healthcare decisions were predominantly made by healthcare professionals, to a more collaborative approach. Key aspects of participatory medicine include:

1. Patient Empowerment: Patients are encouraged to take an active role in their healthcare decisions, informed by their values, preferences, and needs. This often involves educating patients about their conditions and treatment options.

2. Collaboration: Healthcare providers and patients work together as partners. Providers offer their expertise and guidance, while patients bring their personal experience and knowledge of their own health.

3. Shared Decision-Making: Decisions about treatments and care plans are made collaboratively, with healthcare providers and patients contributing equally to the decision-making process.

4. Patient-Centered Care: The focus is on treating the patient as a whole person, not just the disease or condition. This approach takes into account the patient’s lifestyle, emotional, and social needs.

5. Use of Technology: Participatory medicine often involves the use of digital tools and technologies, such as health apps, electronic health records, and online patient communities, to facilitate communication, information sharing, and self-management.

6. Health Literacy and Education: Efforts are made to improve patients’ understanding of their health conditions and the healthcare system, empowering them to make informed choices.

7. Access to Information: Patients have access to their own medical records and other health information, enabling them to be more informed and engaged in their care.

Participatory medicine aims to improve health outcomes by leveraging the unique insights and contributions of each patient, fostering a more personalized and effective healthcare experience.

I too have been using GPT (bard.google.com) for healthcare questions. Not what is participatory medicine, but other questions. One thing that concerns me is the sources used to answer health questions. They are NOT my primary go-tos: Mayo Clinic, Cleveland Clinic, NLM.gov, NIH.gov, etc.

This concerns me because the machine learning is “learning” from searches, and I envision a funnel effect in what come to be known as reliable sources (one that will leave behind the truly reliable, curated, and objective sources). I also wonder whether there is a way to give greater weight to reliable sources.

Which makes me wonder whether any health consumers are on the committees that are working on guardrails and ethics. When I look at the answers that I find and the questions that I ask, I wonder whether that is the kind of query that the large healthcare systems and health policy experts are thinking about.

Look forward to your comments.

Hi Beth!

> makes me wonder whether any health consumers are on the committees that are working on guardrails and ethics.

Absolutely! I think we’re on the verge of an explosion around this issue. Like, who gets to say what info is trustworthy?? This issue is exactly what sank Oncology Watson: the management decided to do whatever the establishment said, and voila, six years later (and $6 billion!) the news was that Oncology Watson was no better than human doctors. Imagine!

We need governance that recognizes that we consumer-patients are no longer clueless. That model is in the wrong century – literally.

btw, Bard is not ChatGPT – it’s Google’s competitor. Try asking the same questions to chat.openAI.com and let us know if there’s a difference.

I’ve used Bard and had some solid sources cited… that had no connection to the data they were allegedly putting out. But a lay person wouldn’t know that.

Michael!

1. Every patient I work with on such things knows to check every source it gives before using the answer in any serious way! You’re talking like an old school anti-Googling doctor! :-)

2. Curious: Did you get anything better when you tried the same with GPT-4?

ANY reference currently provided by ANY public LLM is potentially a hallucination. It’s that simple.

Excellent – let’s dig into that.

What would make something *not* potentially a hallucination?

Is there any way out of this issue, short of having some authority that we trust absolutely?

There are many ways you can combat hallucinations in AI, and some companies are doing that pretty well. But it’s not easy: it takes a combination of the training docs you use and prompt engineering. AI gets very complicated very quickly if you’re trying to build human interactions with it that make sense and don’t devolve in unexpected ways.

LLMs and most doctors suffer from the same disease: they don’t say “I don’t know”. So if you ask LLMs to produce content with scientific references, they will invent them instead of saying “I can’t do that” or “I don’t have any”, just as many doctors will misdiagnose someone because they can’t say “I don’t have the knowledge to properly diagnose your ailment”.